By Grace Stanley

Appointed in the fall of 2025, Harald Haraldsson is an associate professor of the practice in design tech at Cornell Tech and the Cornell University College of Architecture, Art, and Planning. He directs the XR Collaboratory, a hands-on lab in the Tata Innovation Center focused on multimodal 3D user interfaces for augmented and virtual reality (AR/VR).

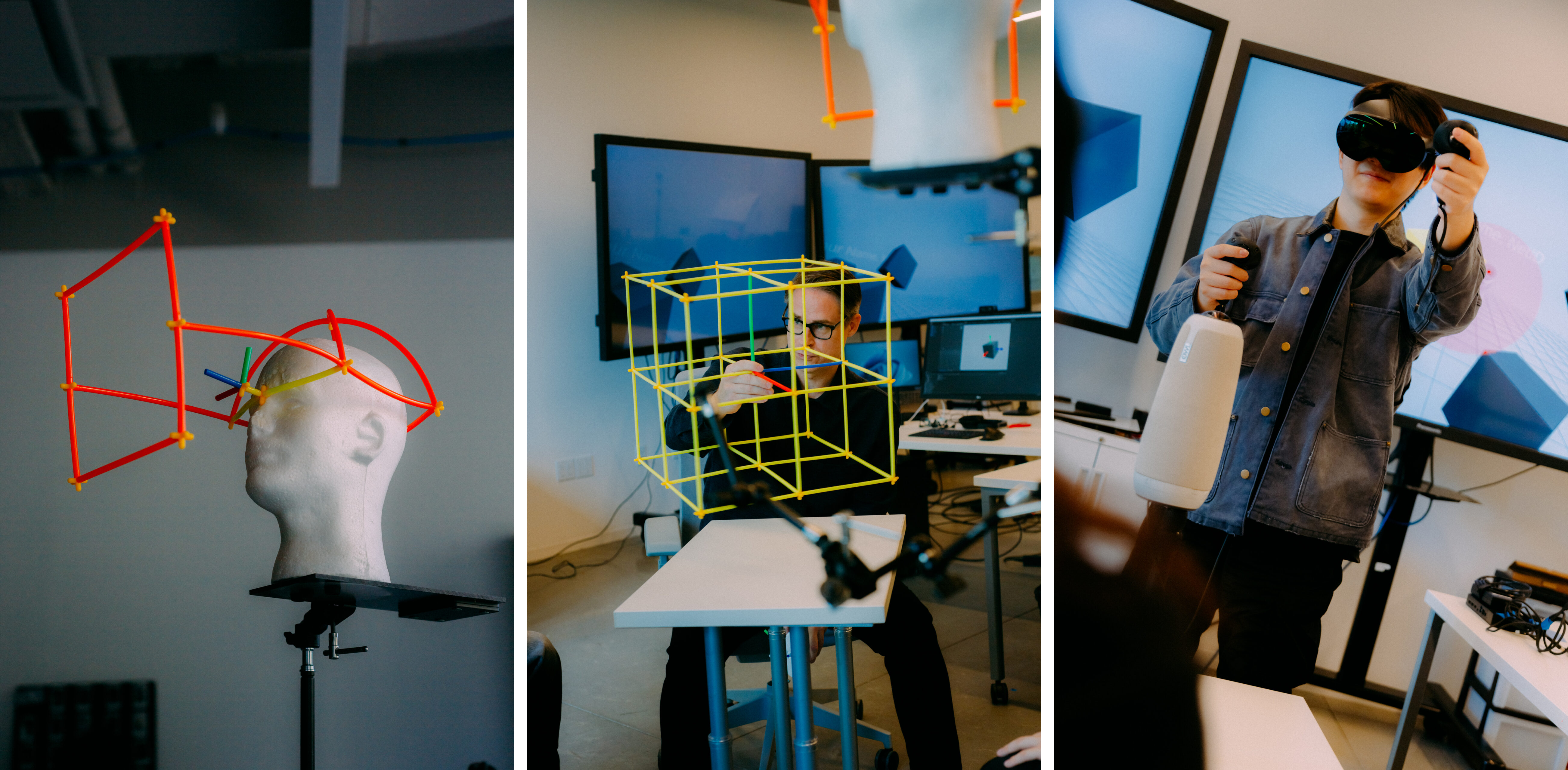

Through rapid prototyping and creative experimentation, Harald and his students explore how emerging technologies can reshape the way we interact with both digital and physical environments. We asked Harald about his vision for extended reality (XR), the lab’s unique approach to prototyping, and what excites him most about the future of immersive design.

You’ve been running the XR Collaboratory since 2018 — what inspired its initial launch, and how has it evolved over the years?

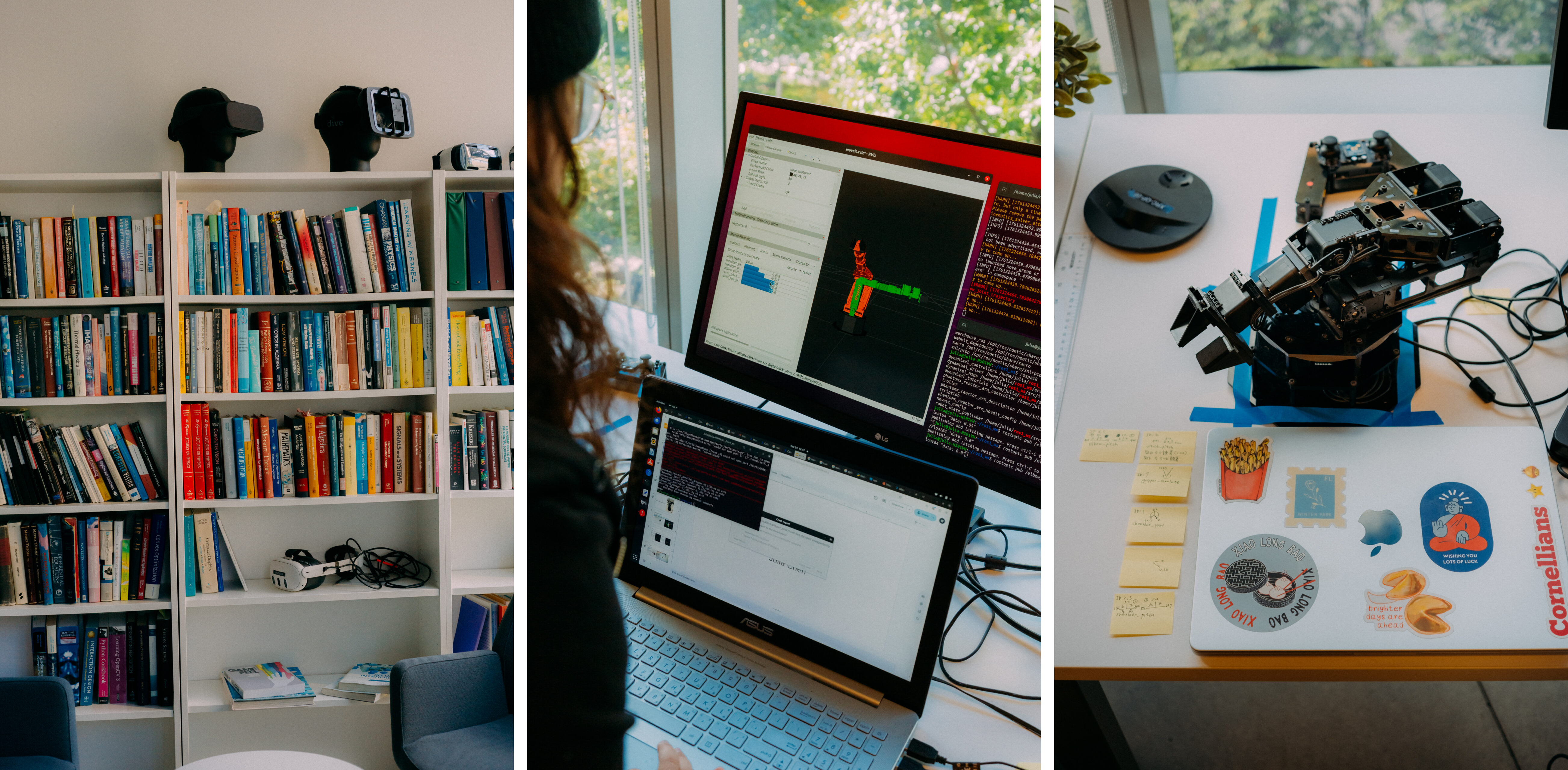

We saw growing interest in virtual and augmented reality from both students and faculty, and decided to bring the XR courses, projects, and events we were developing under a single initiative: the XR Collaboratory. Since then, the lab has evolved into a focused environment for the design and development of 3D user interfaces for AR and VR, with an emphasis on creative applications and design tools.

Throughout this process, I have been fortunate to be guided by my mentors and colleagues, Professors Serge Belongie and Deborah Estrin. The XR Collaboratory also benefits from a strong community of XR-related labs, researchers, and collaborators across Cornell Tech, Cornell University in Ithaca, and Weill Cornell Medicine.

What makes the XR Collaboratory different from other AR/VR labs in academia?

XR Collaboratory projects are often structured around semester-long timelines and designed as hands-on, fast-paced experiences for our master’s students. Each project combines technical implementation with theoretical grounding and typically results in fully documented code, working systems, videos, and technical reports. While some student projects do lead to academic publications, publication is not the primary goal of this work.

In parallel, I lead longer-term projects within the lab and collaborate with industry partners and faculty on XR-related work that has produced outcomes in areas such as computer vision, healthcare, and digital fabrication.

How is artificial intelligence changing the way you and your students approach AR/VR design?

Artificial intelligence has become integral to our work. Today, most of our projects integrate multimodal foundation models. Designing 3D user interfaces around these non-deterministic systems introduces new challenges, but it also enables novel forms of interaction and creative exploration. This spring, we are developing projects that explore more complex workflows, including the orchestration of LLM-powered agents for 3D interaction.

The Collaboratory focuses on fast-paced prototyping — why is that important in the context of emerging technologies?

I would frame our approach as effective prototyping rather than speed for its own sake. The goal is to work efficiently, avoid redundant effort, and create foundations that others in the lab can build on. To support this, we have developed internal guidelines and a set of modular packages, known as the XRC Toolkit, which every project builds upon. In emerging technologies such as XR, design conventions are still evolving and vary across platforms and form factors. Maintaining a consistent, modular system allows small, fast-moving teams like ours to produce coherent, high-quality work over time, even as students rotate through the lab as they complete their degrees.

What kinds of multimodal interactions are you exploring, beyond the typical keyboard and mouse?

Our projects frequently combine hand tracking, gaze, and voice input. While different platforms and frameworks offer different strengths, recent advances in foundation models, along with our own toolkit work, have made multimodal prototyping far more seamless than before. I am particularly excited about our project lineup for 2026.

What drew you to Cornell Tech, and how does your creative arts background influence your approach to teaching and research?

Cornell Tech is a unique campus. It is modern, and you can sense that it was intentionally designed to support interdisciplinary collaboration. Roosevelt Island is also a special place, just one subway stop from Manhattan, while still feeling like a quiet suburb.

I have spent my career exploring technology through creative practice, including interaction design, interactive installations, and experimental video work. This background shapes how I approach my role at Cornell Tech. I believe strongly in using speculative experiments and creative prototypes to produce outcomes that can inform further research or product innovation with our collaborators.

How do your courses complement the lab’s work, and what can students expect from them?

Our courses aim to provide students with both the theoretical and technical foundations needed to contribute meaningfully to real-world XR projects. We develop a shared vocabulary and understanding by covering topics such as 3D math, human physiology and perception, and human-computer interaction, while also guiding students through the process of building functional prototypes using software tools.

What excites you most about the future of head-mounted displays and wearable interfaces?

Head-mounted displays will continue to influence professional work in design, architecture, and engineering, as well as gaming, entertainment, and media creation. The growing range of wearables, from lightweight AI-assisted eyewear to future AR systems, is shifting interface design toward more contextual and adaptive systems, an area of particular interest in my work.

We are also exploring new ways of interacting with the physical world, including projects that use multimodal 3D interfaces for augmented reality to control motors and simple robot arms.

What kind of real-world applications or societal impact do you envision for the work being done in your lab?

Many of our projects are intentionally application-agnostic, focusing on fundamental interaction techniques for tasks such as selection, manipulation, system control, and locomotion. At the same time, we explore applied domains that support creative workflows, including tools for ideation, 3D modeling, and fabrication. I see strong potential for XR systems to extend the creative process and support designers, artists, and engineers in working in more expressive and embodied ways.

Grace Stanley is the staff writer-editor for Cornell Tech.