This Smartphone Keyboard App Can Read Your Emotions


Miscommunications via smartphones have been a running joke for nearly a decade: Mistyped and missing words, unfamiliar slang and acronyms can sometimes make for comical conversations.

But even when a message is communicated in complete sentences, we often misjudge the author’s intentions and current emotional state.

It’s this disconnect that three Cornell Tech students are aiming to solve: Hsiao-Ching Lin, Huai-Che Lu, and Claire Opila, all graduating with Technion-Cornell Dual Master’s Degrees in Connective Media in spring 2017. Their solution is a smartphone keyboard app for iOS or Android called Keymochi.

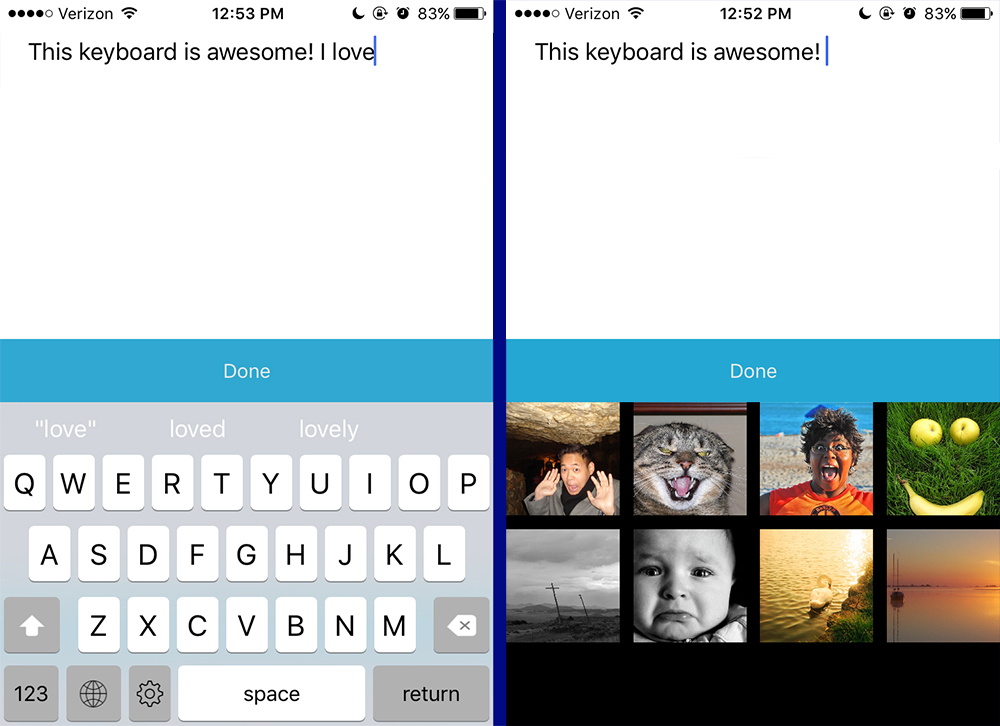

How it works

Keymochi uses data like typing speed, punctuation changes, the amount of phone movement, the distance between keys, and a rough analysis of the user’s sentiment to detect emotions.

That means that as a user is typing out a text message or email via smartphone, each movement adds to an emotional profile of the user. In addition, users can select one of 16 pictures to indicate their mood by using a photographic affect meter, or PAM, tool.

Once the user is finished typing their message, the data is automatically encrypted and uploaded anonymously to the Keymochi database, where the team can start to build a user-specific machine-learning algorithm.

To protect privacy, Keymochi does not store what is typed, just how it is typed—the physical cues and the sentiment analysis from PAM.
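As a rough illustration of recording how something is typed without recording what is typed, the sketch below reduces a list of key presses to a few summary numbers. It is not Keymochi's code: the `KeyEvent` fields, the `typing_features` function, and the three feature names are all hypothetical, chosen only to mirror the kinds of signals described above (typing speed, distance between keys, punctuation).

```python
from dataclasses import dataclass
from math import hypot
from typing import Dict, List


@dataclass
class KeyEvent:
    """One key press. Hypothetical structure: note that no character is stored."""
    t: float         # seconds since the start of the typing session
    x: float         # key centre on screen, arbitrary units
    y: float
    is_punct: bool   # whether the key was a punctuation mark


def typing_features(events: List[KeyEvent]) -> Dict[str, float]:
    """Summarise a typing session using timing and position only."""
    if len(events) < 2:
        return {"keys_per_sec": 0.0, "mean_key_distance": 0.0, "punct_ratio": 0.0}

    duration = events[-1].t - events[0].t
    # Straight-line distance between each pair of consecutive key presses.
    distances = [hypot(b.x - a.x, b.y - a.y) for a, b in zip(events, events[1:])]

    return {
        "keys_per_sec": len(events) / duration if duration > 0 else 0.0,
        "mean_key_distance": sum(distances) / len(distances),
        "punct_ratio": sum(e.is_punct for e in events) / len(events),
    }
```

Because only these aggregates would leave the function, the typed text itself could not be reconstructed from them, which is the privacy property the team describes.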

“We have a rough prototype that we built last semester with exaggerated emotional data based on ourselves,” said Opila. “But now we’re going through the process of extending the technology and we’ll be conducting longer studies with other students.”

So far, the app is able to predict emotions with 82 percent accuracy.

Improving mental health

The idea for Keymochi emerged out of a desire to build a mental health-focused application that could be configured for any number of scenarios. Once they had settled on a direction, the team studied the latest research from institutions such as MIT’s Media Lab to learn more about affective computing.

“The subject is fascinating—subtle changes within someone’s facial expression can change their typing pattern,” said Lu. “After reading that, we started to look into ways to collect emotional data and develop a machine-learning algorithm.”

Because each of us has different ways of typing and forming sentences, Lu added, it would be erroneous for Keymochi to operate with a standardized set of assumptions.

“While there might be a baseline point for each user, over time each user’s unique interactions with their phone would contribute to a personalized data set,” Lu said.
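One simple way to picture a per-user baseline is to compare each new measurement with that user's own history rather than with a population average. The sketch below does this with a running mean and variance (Welford's method) and a z-score. It is an assumption made for illustration, not the team's algorithm, and the `UserBaseline` class and its methods are hypothetical.

```python
from math import sqrt


class UserBaseline:
    """Running statistics for one feature (e.g. typing speed) of one user."""

    def __init__(self) -> None:
        self.n = 0        # number of observations seen so far
        self.mean = 0.0   # running mean
        self._m2 = 0.0    # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        """Fold one new observation into the baseline (Welford's update)."""
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (value - self.mean)

    def z_score(self, value: float) -> float:
        """How unusual a value is for this user, in sample standard deviations."""
        if self.n < 2:
            return 0.0  # not enough history to judge
        std = sqrt(self._m2 / (self.n - 1))
        return (value - self.mean) / std if std > 0 else 0.0
```

Under this scheme, a typing speed that is ordinary for a fast typist would stand out for a slow one, since each is measured against a personal baseline that shifts as more data arrives.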

Providing emotional support

Eventually, the Keymochi team hopes their app will be able to apply circumstantial information for a more accurate emotional reading.

“If someone is sitting on the subway or at their desk in an office, we’d also collect the contextual data, like the location and the time, to enhance the machine learning results,” said Lin.

This, in turn, could support other connected experiences. For example, if Keymochi detects that someone is sad, Opila noted, then when that person walks into their home the lights might adjust to a more cheerful brightness or a connected device could play their favorite song.

From left to right: Claire Opila, Hsiao-Ching Lin, Huai-Che Lu

Commercial applications

Though the team had originally been developing an application for the mental health field, rigorous testing and feedback from Cornell Tech mentors and students made them consider a wider range of use cases for the application.

“We know that companies often record conversations when customer service agents talk to customers,” said Lin. “With the Keymochi keyboard, agents might be able to understand ahead of time what kind of mood the customer is in—and make recommendations for appropriate actions and dialogue during the call. At the very least, it could help a customer service agent be prepared for a difficult experience.”

Lu said he hopes that Keymochi could eventually be a customizable customer service tool, so that many different companies can use it for various needs.

“We’re thinking about creating a software development kit that developers can use for their own apps,” said Lu. “By implementing our application, it would help software companies learn more about their own customers and help them develop a better product.”

As the team continues to build out the app, one thing is for certain: Applying affective computing to everyday life represents an enormous opportunity.

“There’s a market exploding for emotionally-driven services and communications,” said Opila. “With this highly personalized system that’s tailored to the user, we can understand someone’s emotions in a variety of situations throughout the day.”
