Cornell Tech Professors Receive $3 Million NSF Grant for Accountable Decision Systems Project

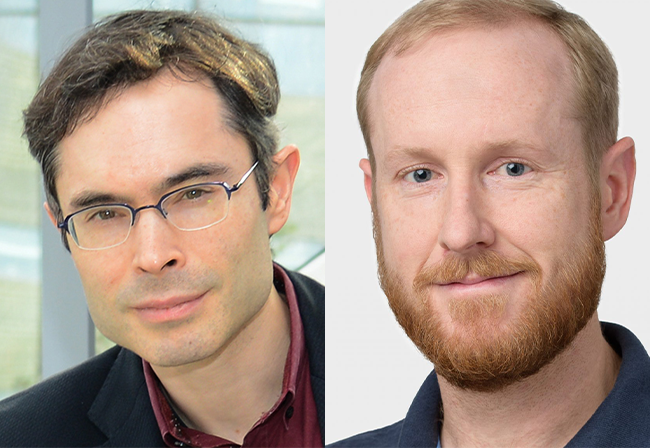

NEW YORK – Cornell Tech professor of information science Helen Nissenbaum and associate professor of computer science Thomas Ristenpart are collaborating on a new $3 million National Science Foundation project on accountable decision systems that respect privacy and fairness expectations. The project team also includes Anupam Datta, associate professor at Carnegie Mellon University CyLab; Matthew Fredrikson, assistant professor of computer science at CMU; Ole Mengshoel, principal systems scientist in electrical and computer engineering at CMU; and Michael C. Tschantz, senior researcher at the International Computer Science Institute in Berkeley.

Increasingly, decisions and actions affecting people’s lives are determined by automated systems processing personal data. Excitement about these systems has been accompanied by serious concerns about their opacity and the threats they pose to privacy, fairness, and other values. Examples abound in real-world systems: Target’s use of predicted pregnancy status for marketing; Google’s use of health-related search queries for targeted advertising; race being associated with automated predictions of recidivism; gender affecting displayed job-related ads; race affecting displayed search ads; Boston’s Street Bump app focusing pothole repair on affluent neighborhoods; Amazon’s same-day delivery being unavailable in black neighborhoods; and Facebook showing either “white” or “black” movie trailers based upon “ethnic affiliation”.

Recognizing these concerns, the project seeks to make real-world automated decision-making systems accountable for privacy and fairness by enabling them to detect and explain violations of these values. The project will explore applications in online advertising, healthcare, and criminal justice, in collaboration with domain experts.

“A key innovation of the project is to automatically account for why an automated system with artificial intelligence components exhibits behavior that is problematic for privacy or fairness,” said CMU’s Anupam Datta, project PI. “These explanations then inform fixes to the system to avoid future violations.”

Thomas Ristenpart, associate professor at Cornell Tech, added, “Unfortunately, we don’t yet understand what machine learning systems are leaking about privacy-sensitive training data sets. This project will be a great opportunity to investigate the extent to which having access to prediction functions or their parameters reveals sensitive information, and, in turn, how to improve machine learning to be more privacy friendly.”

“Committing to philosophical rigor, the project will integrate socially meaningful conceptions of privacy, fairness, and accountability into its scientific efforts,” said Helen Nissenbaum, professor at Cornell Tech, “thereby ensuring its relevance to fundamental societal challenges.”

To address privacy and fairness in decision systems, the team must first provide formal definitional frameworks of what privacy and fairness truly entail. These definitions must be enforceable and context-dependent, covering both protected information itself (such as race, gender, or health information) and proxies for that information, so that the full scope of risks is addressed.

“Although science cannot decide moral questions, given a standard from ethics, science can shed light on how to enforce it, its consequences, and how it compares to other standards,” said Tschantz.

Another fundamental challenge the team faces is enabling accountability while also protecting the system owners’ intellectual property and the privacy of the system’s users.

“Since accountability mechanisms require some level of access to the system, they can, unless carefully designed, leak the intellectual property of data processors and compromise the confidentiality of the training data subjects, as demonstrated in the prior work of many on the team,” said Fredrikson.

The interdisciplinary team of researchers combines the skills of experts in philosophy, ethics, machine learning, security, and privacy. Datta hopes the project will enable accountability in automated decision systems, an achievement that would add a layer of humanity to artificially intelligent systems.

Research by Cornell Tech faculty reflects collaboration with the New York City tech industry, from startups to established enterprises. Bolstered by our foundation in academic rigor, we nurture projects that are both visionary and reflective, simultaneously advancing theory and technical practice. Since our founding, we have continued to evolve through exploration and boundary-pushing. Guided by both academic excellence and practical impact, we work determinedly to advance life within and beyond our campus. Our purpose-driven research program spurs relevant and valuable progress in five areas: Human-Computer Interaction (HCI); Security & Privacy; Artificial Intelligence; Data & Modeling; and Business, Law and Policy.

About Cornell Tech

Cornell Tech brings together faculty, business leaders, tech entrepreneurs and students in a catalytic environment to produce visionary results grounded in significant needs that will reinvent the way we live in the digital age.

From 2012 to 2017, the campus was temporarily located in Google’s New York City building. In fall 2017, 30 world-class faculty and almost 300 graduate students moved to the first phase of Cornell Tech’s permanent campus on Roosevelt Island, where they continue to conduct groundbreaking research, collaborate extensively with tech-oriented companies and organizations, and pursue their own startups. When fully completed, the campus will include two million square feet of state-of-the-art buildings, over two acres of open space, and will be home to more than 2,000 graduate students and hundreds of faculty and staff.